Neo-Nazi group the Atomwaffen Division has been linked to several killings and openly promotes a violent, white supremacist ideology. So why is their YouTube channel still online?

Atomwaffen Division hit headlines in recent days after Samuel Woodward, who is thought to be in its ranks, was charged with fatally stabbing college student Blaze Bernstein in January. In an online message group seen by news website ProPublica, members praised Woodward for killing Bernstein, who was a gay, Jewish student. One member of the group called Woodward a "one man gay Jew wrecking crew."

Bernstein's death is one of five murders in the U.S. that the group, which was founded in 2013, has been associated with since May 2017.

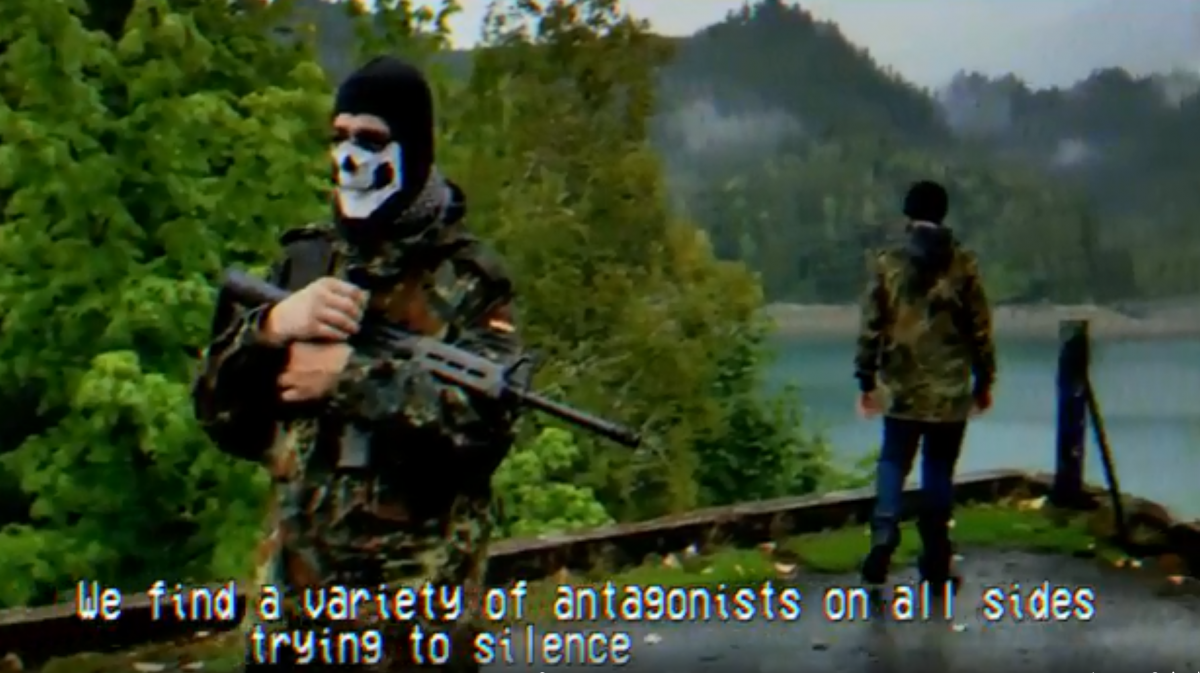

On its website, Atomwaffen Division describes itself as a "Revolutionary National Socialist organization centered around political activism and the practice of an autonomous Fascist lifestyle." Claiming to perform "activism and militant training (hand to hand, arms training, and etc.)" it tells "keyboard warriors" not interested in real-world actions "don't bother [getting involved in the group]." Atomwaffen Division has around 20 cells across the U.S., with the largest in Virginia, Texas and Washington, according to ProPublica.

The group's website directs visitors to its YouTube channel, which earlier this week featured videos that were preceded by a content warning reading: "The following content has been identified by the YouTube community as inappropriate or offensive to some audiences."

Those included content released in the weeks following Bernstein's death showing members firing semi-automatic weapons and appearing to call for the genocide of Jewish people and non-whites by chanting "gas the kikes. Race war now," the Daily Beast reported.

At the time of writing on Tuesday, the group's official YouTube page and a back-up page that appeared to be linked with it were operating but no longer featured videos.

Online platforms including Twitter have clamped down on accounts run by extremist individuals and organizations—though Atomwaffen Division doesn't appear to have operated on that site—leading some to ask why Atomwaffen Division's YouTube account remains intact.

As a result, the website has found itself answering questions about how it balances its arguable duty to stop the spread of violent ideologies against its commitment to free speech, and about how seriously it takes its Community Guidelines, which prohibit hate speech based on attributes including race, ethnic origin, religion and sexual orientation.

When asked by Newsweek whether YouTube plans to remove Atomwaffen Division's videos and other far-right, extremist and neo-Nazi content, a spokesperson from YouTube did not comment on specifics.

"We announced last June that we would be taking a tougher stance on videos that are borderline against our policies on hate speech and violent extremism by putting them behind a warning interstitial and removing certain features, such as recommended videos and likes," the spokesperson said, adding: "We believe this approach strikes a good balance between allowing free expression and limiting affected videos' ability to be widely promoted on YouTube."

The site's users can flag videos that they find offensive or think break the website's guidelines. Accounts highlighted repeatedly risk having their channel terminated.

The platform's spokesperson did not immediately respond on Tuesday when asked if the Atomwaffen Division's videos were removed by the user or because they violated YouTube's policies.

Gregory Magarian, free speech expert and Professor of Law at Washington University in St. Louis, told Newsweek that he wants private intermediaries to take free speech principles seriously.

Although he argued that it is better to have bad ideas in plain view than operating in secret, he said that Atomwaffen Division's implication in several murders is a "valid bright line for [YouTube] banning them."

Although YouTube isn't bound by the First Amendment, private intermediaries like it increasingly determine what people see and hear, he said. "Five murders are more than a threat – they're action, a threat realized. I think it's obvious that we should be concerned that this group will commit more violent acts."

Atomwaffen is but one example of a complex, marginalized neo-Nazi culture in America that is spread across a wide range of groups, from the National Socialist Movement to the Daily Stormer, said Paul Jackson, far-right expert and lecturer in history at the U.K.-based University of Northampton.

Websites need to think carefully about what they are offering such groups, and why, when they allow them to use their services, he told Newsweek.

Accusing tech companies of interpreting free speech principles in simplistic ways, he said: "YouTube are under no obligation to allow such groups to promote their extremist and racist material." And if more cultural pressure were put on such sites to take responsibility for the media disseminated on their platforms, they could feel obliged to remove more material in order to protect their mainstream brand identity, he added.

One organization that has already distanced itself from extremists is AppNexus, the operator of one of the world's biggest digital advertising services. In 2016, it blocked right-wing website Breitbart News from using its ad-serving tool, citing hate speech.

Brian O'Kelley, CEO and founder of AppNexus, told Newsweek, "There is a sharp distinction between a government stifling free speech and a private company declining to do business with a particular individual or group of individuals."

Referring to YouTube's parent company Google, he stressed: "I don't think most Google employees agree with or like this content. But the problem is their business model.

"The company is making money by packaging a little bit of good content with a lot of low-quality, user-generated content and then selling adverts. That low-quality content can come in the form of amusing cat videos. But some of it will invariably be objectionable."

"I'd like to see advertisers reward real publishers by shifting their budgets to quality content," he said, adding: "It's time for YouTube and its parent company to grow up and decide what they really want to be."

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.

About the writer

Kashmira Gander is Deputy Science Editor at Newsweek. Her interests include health, gender, LGBTQIA+ issues, human rights, subcultures, music, and lifestyle.