A realtor sends a prospective homebuyer a blurry photograph of a house taken from across the street. The homebuyer can compare it to the real thing — look at the picture, then look at the real house — and see that the bay window is actually two windows close together, the flowers out front are plastic and what looked like a door is actually a hole in the wall.

What if you aren’t looking at a picture of a house, but something very small — like a protein? There is no way to see it without a specialized device so there’s nothing to judge the image against, no “ground truth,” as it’s called. There isn’t much to do but trust that the imaging equipment and the computer model used to create images are accurate.

Now, however, researchers in the lab of Matthew Lew at the McKelvey School of Engineering at Washington University in St. Louis have developed a computational method to determine how much confidence a scientist should have that their measurements, at any given point, are accurate, given the model used to produce them.

The research was published Dec. 11 in Nature Communications.

“Fundamentally, this is a forensic tool to tell you if something is right or not,” said Lew, assistant professor in the Preston M. Green Department of Electrical & Systems Engineering. It’s not simply a way to get a sharper picture. “This is a whole new way of validating the trustworthiness of each detail within a scientific image.

“It’s not about providing better resolution,” he added of the computational method, called Wasserstein-induced flux (WIF). “It’s saying, ‘This part of the image might be wrong or misplaced.’”

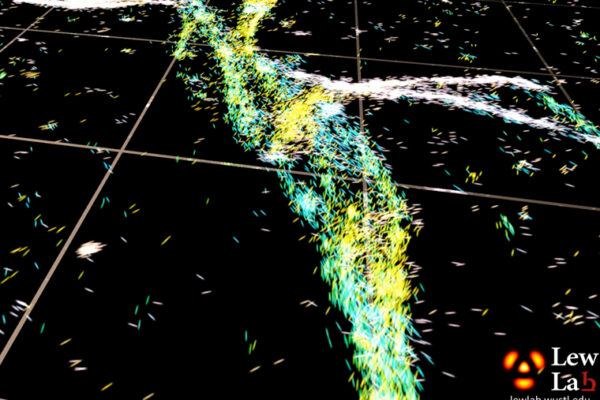

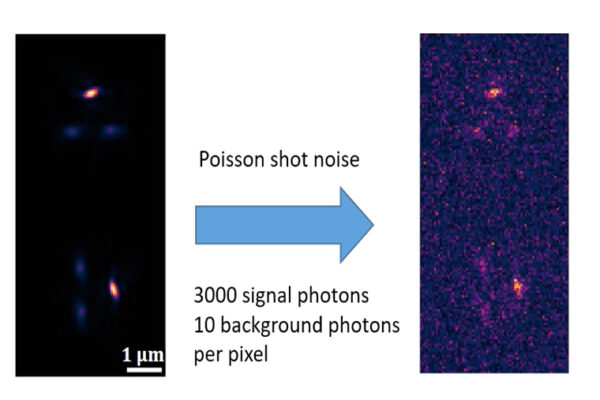

The process used by scientists to “see” the very small — single-molecule localization microscopy (SMLM) — relies on capturing massive amounts of information from the object being imaged. That information is then interpreted by a computer model that ultimately strips away most of the data, reconstructing an ostensibly accurate image — a true picture of a biological structure, like an amyloid protein or a cell membrane.

There are a few methods already in use to help determine whether an image is, generally speaking, a good representation of the thing being imaged. These methods, however, cannot determine how likely it is that any single data point within an image is accurate.

Hesam Mazidi, a recent graduate who was a PhD student in Lew’s lab for this research, tackled the problem.

“We wanted to see if there was a way we could do something about this scenario without ground truth,” he said. “If we could use modeling and algorithmic analysis to quantify if our measurements are faithful, or accurate enough.”

The researchers didn’t have ground truth, no house to compare to the realtor’s picture, but they weren’t empty-handed. They had a trove of data that is usually ignored. Mazidi took advantage of the massive amount of information gathered by the imaging device that usually gets discarded as noise. The distribution of that noise is something the researchers can use as ground truth because it conforms to specific laws of physics.

“He was able to say, ‘I know how the noise of the image is manifested, that’s a fundamental physical law,’” Lew said of Mazidi’s insight.

“He went back to the noisy, imperfect domain of the actual scientific measurement,” Lew said, referring to all of the data points recorded by the imaging device. “There is real data there that people throw away and ignore.”

Instead of ignoring it, Mazidi looked to see how well the model predicted the noise — given the final image and the model that created it.
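The idea of checking how well a model predicts the noise can be illustrated with a toy example. The sketch below is not the WIF algorithm itself. It assumes a simple Gaussian blur for a single molecule, Poisson (shot) noise on the camera, and hypothetical helper names (`expected_image`, `poisson_log_likelihood`). It only shows that raw, noisy pixel counts agree better with a correctly placed point than with a misplaced one.

```python
import numpy as np

def expected_image(positions, photons, background, size=15, sigma=1.3):
    """Mean photon count per pixel predicted by an assumed Gaussian-blur model."""
    yy, xx = np.mgrid[0:size, 0:size]
    img = np.full((size, size), float(background))
    for (x, y), n in zip(positions, photons):
        psf = np.exp(-((xx - x) ** 2 + (yy - y) ** 2) / (2 * sigma ** 2))
        img += n * psf / psf.sum()  # spread n photons over the blurred spot
    return img

def poisson_log_likelihood(measured, expected):
    """Poisson log-likelihood of the raw counts, omitting the constant log(k!) term."""
    return float(np.sum(measured * np.log(expected) - expected))

# Simulate one noisy camera frame of a molecule at (7, 7). All values are illustrative.
rng = np.random.default_rng(0)
truth = expected_image([(7.0, 7.0)], [2000], background=5)
measured = rng.poisson(truth)

# Score two candidate reconstructions against the same raw, noisy data.
good = poisson_log_likelihood(measured, expected_image([(7.0, 7.0)], [2000], 5))
bad = poisson_log_likelihood(measured, expected_image([(10.0, 7.0)], [2000], 5))
print(good > bad)  # the correctly placed point should explain the noise better
```

According to the article, WIF goes further than this whole-frame comparison by assigning a confidence to each individual point. The sketch covers only the underlying principle that noise statistics can stand in for ground truth.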

Analyzing so many data points is akin to running the imaging device over and over again, performing multiple test runs to calibrate it.

“All of those measurements give us statistical confidence,” Lew said.

WIF does not ask whether the entire image is probable under the model. Instead, given the image, it asks whether any individual point in it is probable under the assumptions built into the model.

Ultimately, Mazidi developed a method that can say with strong statistical confidence that any given data point in the final image should or should not be in a particular spot.

It’s as if the algorithm analyzed the picture of the house and — without ever having seen the place — it cleaned up the image, revealing the hole in the wall.

In the end, the analysis yields a single number per data point, between -1 and 1. The closer to 1, the more confident a scientist can be that a point on an image accurately represents the thing being imaged.
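As a rough illustration of how such per-point scores might be used, the sketch below flags points whose confidence falls under a cutoff. The function name `flag_low_confidence`, the example scores and the 0.5 threshold are all hypothetical choices for this example, not values from the research.

```python
import numpy as np

def flag_low_confidence(scores, threshold=0.5):
    """Return a boolean mask marking points whose score is below the (assumed) cutoff."""
    scores = np.asarray(scores, dtype=float)
    if np.any((scores < -1) | (scores > 1)):
        raise ValueError("confidence scores are expected to lie between -1 and 1")
    return scores < threshold

# Made-up scores for four points in a reconstructed image.
scores = [0.95, 0.8, -0.3, 0.1]
suspect = flag_low_confidence(scores)
print(suspect)  # [False False  True  True]
```

A scientist could then inspect or discard the flagged points rather than trusting every detail of the image equally.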

This process can also help scientists improve their models. “If you can quantify performance, then you can also improve your model by using the score,” Mazidi said. Without access to ground truth, “it allows us to evaluate performance under real experimental conditions rather than a simulation.”

The potential uses for WIF are far-reaching. Lew said the next step is to use it to validate machine learning, where biased datasets may produce inaccurate outputs.

How would a researcher know, in such a case, that their data was biased? “Using this model, you’d be able to test on data that has no ground truth, where you don’t know if the neural network was trained with data that are similar to real-world data.

“Care has to be taken in every type of measurement you take,” Lew said. “Sometimes we just want to push the big red button and see what we get, but we have to remember, there’s a lot that happens when you push that button.”