The first medical diagnostic image was taken in 1896 using an X-ray — a phenomenon discovered just a year earlier. The X-ray provided a snapshot of suspected broken bones in the wrist of a young boy. This innovation — the wild idea that doctors could see inside a body — kicked off a transformation in medicine. Since the days of those first grainy, black-and-white images, imaging scientists have created new tools and techniques to visualize the human body in ever-more-intricate detail. Today, imaging is used for everything from mapping the network of connections within the brain to diagnosing cancer to monitoring the development of a beating heart.

Washington University physicians and researchers at the School of Medicine and engineers on the Danforth Campus have been at the forefront of imaging science for more than 125 years.

The university’s first hospital was among the early leaders in adopting X-ray technology and teaching it to students. In the 1920s, Washington University researchers were the first to use X-rays with a contrast agent to view the gallbladder, making it safer and easier to diagnose gallbladder disease and paving the way for the development of contrast agents to image other organs.

In 1931, the Mallinckrodt Institute of Radiology (MIR) at the School of Medicine was established to provide imaging services to support patient care and to develop the science of imaging. In the 1970s, research by Michel Ter-Pogossian, PhD, and Michael E. Phelps, PhD, at MIR led to the development of positron emission tomography (PET) and the first PET scanner. Their colleagues, Michael J. Welch, PhD, and Marcus E. Raichle, MD, developed tools and algorithms to use PET to study the brain. Welch developed an oxygen-based PET tracer to measure brain blood flow and metabolism, and Raichle captured some of the first snapshots of the brain at work. Welch also developed similar PET tracers for other parts of the body, laying the foundation for PET to emerge as a critical tool in biomedical research and clinical imaging. PET scans now are used worldwide to detect cancer, heart disease, brain disorders and other conditions. Ter-Pogossian also helped develop the first full-body computed tomography (CT) scanner, and Washington University became home to one of the first three in the world.

In recent decades, university researchers have continued pushing the boundaries of imaging science, developing new ways to use imaging to diagnose and treat disease and to study biological structures, metabolism and physiology, and critical molecular and cellular processes. To name just a few accomplishments, WashU researchers created a new kind of microscope that enables rapid 3D imaging of tens of thousands of neurons; developed investigational high-tech goggles that, when used with a special dye, illuminate cancer cells to help surgeons identify and remove all cancerous tissue; created a new imaging modality that combines ultrasound with near-infrared imaging to diagnose breast and ovarian cancers, a technique now in clinical trials; and applied deep learning to salvage useful information from error-riddled images. And five years ago, the McKelvey School of Engineering, in collaboration with schools across the university, launched an interdisciplinary doctoral program in imaging science, one of only two such programs in the United States, to train the next generation of leaders who will push the field forward in revolutionary ways.

The following vignettes showcase some advances in imaging occurring now across WashU.

Preterm labor

Protecting the health of babies and pregnant women

About 1 million children worldwide die annually from complications of preterm birth. Those who survive are at risk of a lifetime of poor health, including learning and developmental disabilities, vision and hearing impairments, and problems with brain and lung function.

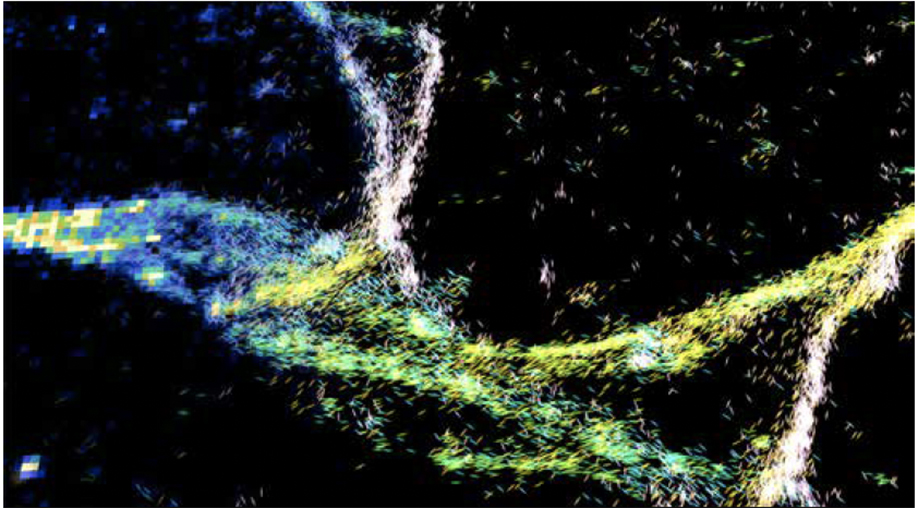

Preterm labor has many causes, but one common feature is abnormal uterine contractions, says Yong Wang, PhD, an obstetrics and gynecology researcher and associate professor at the medical and engineering schools. “Understanding preterm contractions is the key to preventing premature birth and protecting the health of babies and pregnant women,” he says.

Inspired by electrocardiographic imaging of the heart, Wang invented electromyometrial imaging of the uterus, which maps each contraction in 3D as an electrical wave passing over the uterus. Using this imaging, Wang discovered that contractions come in different types, some of which may indicate problems. Such imaging could be used during labor to help doctors detect and treat complications early.

With the aid of two grants from the Gates Foundation, Wang is working to make uterine mapping technology available worldwide. He has adapted the technology for resource-limited settings by replacing expensive MRI scans with ultrasound scans and wired electrodes with printed ones, and he plans to begin testing soon. Wang is also working with electrical & systems engineers Chuan Wang, PhD, an assistant professor, and Shantanu Chakrabartty, PhD, the Clifford W. Murphy Professor, both in the McKelvey School of Engineering, to build ultrathin, soft sensors into a wireless, wearable system that monitors the uterine health of pregnant and non-pregnant women.

Alzheimer’s

Predicting dementia

Alzheimer’s disease starts silently. Two decades or more before symptoms appear, people start sustaining small but relentless assaults on their brains. By the time confusion and memory problems emerge, their brains are severely — possibly irreparably — damaged. Washington University researchers are working on improving diagnosis and management of Alzheimer’s by using brain imaging to detect the disease before symptoms arise.

In the early 2000s, radiologist Tammie L.S. Benzinger, MD, PhD, a professor of radiology at the university’s MIR, pioneered the use of PET brain scans that target the Alzheimer’s protein amyloid beta to identify early signs of the disease. Recently, neurologist Suzanne Schindler, MD, PhD, designed an algorithm that uses data from a single amyloid PET scan, plus a person’s age, to estimate how far that person has progressed toward dementia and how much time remains before cognitive impairment sets in.

PET imaging is powerful but not accessible to all. Dmitriy Yablonskiy, PhD, a radiology researcher and professor at MIR, has developed a novel way to extract evidence of subtle brain damage from more accessible MRI scans. This method can identify brain areas that are no longer functioning due to a loss of healthy neurons, an indication of Alzheimer’s-related brain damage detectable before symptoms arise.

Radiotracers

Tracing disease

PET imaging can reveal subtle signs of disease that other methods cannot detect. It relies on radioactive imaging agents, or tracers, that are injected, inhaled or swallowed, depending on the organ under study. When tracers reach their target, they accumulate and show up as bright spots on the scan. Because those spots can be measured, PET is a powerful tool for diagnosing disease, monitoring treatment effectiveness and individualizing therapy.
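How is a "bright spot" measured? One widely used figure in clinical PET, though not one the researchers above specifically mention, is the body-weight standardized uptake value (SUV): the tracer concentration in a region divided by the injected dose per gram of body weight. An SUV of 1 means the region holds about as much tracer as it would if the dose were spread evenly through the body. The Python sketch below assumes both activities are decay-corrected to the same moment and that tissue density is 1 g/mL; the function name and the patient numbers are hypothetical.

```python
def suv_body_weight(tissue_kbq_per_ml: float, injected_mbq: float, body_weight_kg: float) -> float:
    """Body-weight SUV: regional tracer concentration divided by injected dose per gram.

    Assumes activities are decay-corrected to the same time point and that tissue
    density is 1 g/mL, so kBq/mL and kBq/g can be compared directly.
    """
    if injected_mbq <= 0 or body_weight_kg <= 0:
        raise ValueError("injected activity and body weight must be positive")
    injected_kbq = injected_mbq * 1_000.0
    body_weight_g = body_weight_kg * 1_000.0
    dose_per_gram = injected_kbq / body_weight_g  # kBq/g if the tracer spread evenly
    return tissue_kbq_per_ml / dose_per_gram


# Hypothetical scan: 370 MBq injected into a 74 kg patient is 5 kBq per gram on average.
background = suv_body_weight(5.0, 370.0, 74.0)  # region with average uptake
hot_spot = suv_body_weight(20.0, 370.0, 74.0)   # "bright spot" holding 4x the average
print(f"background SUV = {background:.1f}, hot spot SUV = {hot_spot:.1f}")
```

Normalizing by dose and body weight is what lets the same spot be compared across patients or across repeat scans of one patient, which is how measured uptake can help track whether a treatment is working.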

MIR, part of the School of Medicine, is one of the few research institutions in the country equipped to design, develop, evaluate and translate new PET tracers for use in people. MIR-developed tracers already in clinical use include one to determine cognitive function in patients with Alzheimer’s, Parkinson’s and other dementias (created by a team led by Zhude “Will” Tu, PhD, a professor of radiology) and another that detects unstable blood vessel plaques prone to causing heart attacks and strokes (developed by a team led by Pamela K. Woodard, MD, the Hugh Monroe Wilson Professor of Radiology). Tracers targeting many aspects of inflammation — a complex process that plays a role in a variety of conditions — are under development at MIR for multiple sclerosis, atherosclerosis, liver and lung injury, diabetes, cancer, rheumatoid arthritis, inflammatory bowel disease and more.

![Red, yellow and green spots on a series of digital “slices” of a living 41-year-old woman’s brain reveal that the PET radiotracer [18F]-VAT has found its target deep in the center of the brain. The tracer was developed by researchers at MIR to detect early signs of dementia. This PET scan is normal, indicating a healthy brain. The colored areas would be smaller in the brain of a patient with dementia. (Credit: Zhude Tu, PhD, and Joel Perlmutter, PhD)](https://source.wustl.edu/app/uploads/2022/11/Dec-22_Feature-2_f18VAT_human_brain.jpg)

Biophotonics

Monitoring the brain

Joseph P. Culver, PhD, the Sherwood Moore Professor of Radiology at MIR, uses light to peer inside people’s heads. Recently, he used high-density diffuse optical tomography (HD-DOT) — a noninvasive, wearable, light-based brain imaging technology he invented in 2007 — to detect activity in the visual part of a person’s brain, and then he decoded the activity to determine what the person saw. Decoding vision is a step toward decoding language, and toward Culver’s ultimate goal of creating HD-DOT–based communication aids for people with disabilities.

Culver’s former trainee, Adam Eggebrecht, PhD, an assistant professor of radiology at MIR, is exploring the technology’s potential for preserving the brain health of hospitalized patients.

Using portable bedside imaging units, he is undertaking feasibility studies involving infants either undergoing surgery or on heart-lung machines. The goal is to determine whether continuously monitoring the brain with HD-DOT can help doctors catch signs of failing brain health early enough to intervene and prevent permanent damage.

HD-DOT is a research tool now, but Eggebrecht and Culver are part of a team, including Jason Trobaugh, DSc, and Ed Richter, MS, both professors of practice in electrical & systems engineering at McKelvey, that is working to develop and commercialize wearable versions of the technology with the help of a Small Business Technology Transfer grant from the National Institutes of Health (NIH).

“Imaging and quantitative imaging are critically important ways to individualize therapy and get the right treatment to the proper patient at the right time.”

Richard L. Wahl, MD, head of the department of radiology, director of the university’s Mallinckrodt Institute of Radiology and the Elizabeth E. Mallinckrodt Professor

Photonics

Swapping out electrons for photons

Traditional electronics rely on, well, electrons to transport information through a system and present it in the form of, say, a GIF on your cellphone. But electrons are fickle. They are affected by electromagnetic fields, or even other electrons.

Lan Yang, PhD, the Edwin H. & Florence G. Skinner Professor in the Preston M. Green Department of Electrical & Systems Engineering in the McKelvey School of Engineering, has moved beyond electrons. Her field, photonics, uses photons, individual packets of light, as the fundamental unit of information for everything from environmental sensors to communications relays to quantum computing. Photons don’t interact with one another, they’re unfazed by electromagnetic fields, and they are faster, much faster, than electrons.

In 2018, Yang’s lab took a drone-mounted photonic sensor to Forest Park. There, the team became the first to take an environmental measurement (temperature) using a wireless photonic sensor built around a specially designed resonator known as a whispering-gallery-mode resonator. In 2020, the team exploited another special property of light to make a second breakthrough.

Under some circumstances, and with the right interference patterns, light can pass through otherwise opaque media. Yang’s team devised a fully contained optical resonator system that can turn this transparency on and off. The system can also slow light down, giving people more time to send, edit, encrypt and recover communications.

Yang’s team was also the first to demonstrate that its resonators could detect and measure single nanoparticles. That level of sensitivity could make the technology revolutionary for health care, allowing foreign substances or irregularities to be detected in much smaller amounts than is currently possible.

Improving MRIs

Machine learning systems help MRI machines deliver cleaner images

Phones, computers, cars and even doorbells are getting better all the time. And they aren’t improving only because the hardware is getting better; the software, too, is driving innovation.

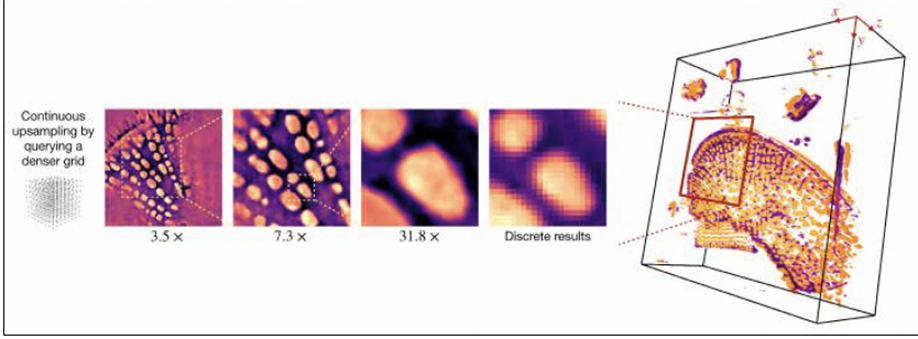

Work from the lab of Ulugbek Kamilov, PhD, assistant professor in the Preston M. Green Department of Electrical & Systems Engineering and in the Department of Computer Science & Engineering, is interdisciplinary by nature. A machine learning system developed by Kamilov, for example, could allow labs in other fields, such as chemistry and biology, to upgrade their standard microscopes to create continuous 3D models from ordinary 2D pictures.

With his second Scialog award, Kamilov also continues to work on combining MRI technology with fluorescence imaging to let neuroscientists see brain development in action and better understand the origins of neurodevelopmental disorders.

And for the anxious among us, good news! Kamilov has collaborated with faculty from the School of Medicine to develop software that improves an MRI machine’s ability to deliver a clean image from less-than-perfect data, such as data from a patient who doesn’t remain absolutely still during a scan. Modern MRI machines can be updated with this software as easily as a home computer can.

A common thread in much of this work is the use of machine learning systems that can improve imaging techniques with only a limited amount of training data. Many of his algorithms can learn from the very samples they are trying to interpret. This could be tremendously useful in personalized medicine, where the subject of each image truly is unique.

It may seem fantastical that a computer can learn from the very data it is trying to understand, but Kamilov has also begun to work out why these algorithms succeed. One of his projects applies them to the large datasets produced by advanced microscopes.

Ethics

Using AI in imaging?

Unlike traditional photography, complex imaging techniques rely on more than light to create a picture; they also rely on interpretation — by a computer.

Artificial intelligence algorithms increasingly translate the data gathered by an imaging device into a picture. However, there is a peculiar thing about AI: How it performs this translation is often opaque, even to the people who developed it.

For example, one AI being trained to look for tumors was classifying too many images as malignant. It turned out that measuring rulers appeared in most of the malignant images it was trained with, so it had learned to classify any image containing a ruler as malignant.
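The ruler problem is an example of what machine learning researchers call shortcut learning: a model latches onto whatever feature best separates the training labels, even an incidental one. The toy Python sketch below is not how the tumor-finding AI worked. It swaps in a one-feature "decision stump" and an invented six-image dataset, and the `Image` class and its `has_ruler` and `irregular_border` features are hypothetical, but it shows how a shortcut can beat a genuine clinical sign during training and then mislabel a benign case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """A toy 'image' reduced to two yes/no features plus its true label (all hypothetical)."""
    has_ruler: bool         # incidental detail of how the photo was taken
    irregular_border: bool  # stand-in for a genuine visual sign of malignancy
    malignant: bool         # ground-truth label


FEATURES = ("has_ruler", "irregular_border")


def train_stump(images: list[Image]) -> str:
    """Return the single feature that best agrees with the label on the training set."""
    def training_accuracy(feature: str) -> float:
        hits = sum(getattr(img, feature) == img.malignant for img in images)
        return hits / len(images)
    return max(FEATURES, key=training_accuracy)


def predict(feature: str, image: Image) -> bool:
    """Call the image malignant whenever the chosen feature is present."""
    return getattr(image, feature)


# Invented training set in which every malignant lesion was photographed with a ruler.
train = [
    Image(has_ruler=True, irregular_border=True, malignant=True),
    Image(has_ruler=True, irregular_border=True, malignant=True),
    Image(has_ruler=True, irregular_border=False, malignant=True),
    Image(has_ruler=False, irregular_border=False, malignant=False),
    Image(has_ruler=False, irregular_border=True, malignant=False),
    Image(has_ruler=False, irregular_border=False, malignant=False),
]

chosen = train_stump(train)
benign_with_ruler = Image(has_ruler=True, irregular_border=False, malignant=False)
print(chosen)                              # has_ruler (6/6 training accuracy vs. 4/6)
print(predict(chosen, benign_with_ruler))  # True: a benign lesion flagged as malignant
```

Nothing in the training score warns that the chosen feature is meaningless; the flaw only surfaces when someone inspects what the model relies on, which is why evaluation frameworks for medical AI matter.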

Abhinav Jha, PhD, assistant professor in biomedical engineering at McKelvey and assistant professor of radiology at MIR, designs algorithms for use in imaging, but he also thinks about the evaluation and the ethics of depending on such opaque systems, particularly in health care. Recently, he led a team of computational imaging scientists, physicians, physicists, biostatisticians and representatives from industry and regulatory agencies to develop a best-practices framework for evaluating AI in nuclear medicine. The guidelines they proposed, known as the RELAINCE guidelines, were published in the Journal of Nuclear Medicine.

He has also worked across a considerable academic divide, partnering with Anya Plutynski, an associate professor of philosophy in Arts & Sciences, to ask patients how they feel about AI in medicine. Jha, Plutynski and collaborators surveyed patients and found that most respondents were OK with AI, not as a decision maker but as a tool that physicians use to make final decisions. Their research was published in Nature Medicine. Separately, with an NIH R01 grant, Jha is developing methods to quantify the uncertainty of AI algorithms when they are used to estimate quantitative metrics.

Multimodal imaging

Pairing technologies for better cancer diagnoses

Collecting near-infrared light is a sensitive way to map how a tumor functions. When it is combined with ultrasound, which shows a tumor’s structure, functional and morphological information can be used together to improve the accuracy of a cancer diagnosis. Quing Zhu, PhD, the Edwin H. Murty Professor of Engineering in biomedical engineering, has for decades been pushing the envelope in cancer diagnosis by using both modalities at the same time.

To reduce unnecessary breast biopsies, Zhu, who is also a professor of radiology in the School of Medicine, is collaborating with radiologists led by Debbie Bennett, MD, associate professor of radiology and chief of breast imaging at MIR, and Steven Poplack, MD, professor of radiology at Stanford Health. They use ultrasound to locate a tumor and optical sensors to map hemoglobin concentration, which is associated with cancer risk. Initial data show the technology can reduce benign biopsies by more than 20% without missing any cancers.

On the ovarian cancer front, Zhu’s team is collaborating with a team of radiologists led by Cary Siegel, MD, professor of radiology, and a team of surgeons led by Matthew Powell, MD, professor of obstetrics and gynecology. They use co-registered ultrasound and photoacoustic imaging to accurately assess ovarian lesion risk and reduce unnecessary surgeries.

Zhu’s team is taking on colorectal cancer as well, collaborating with a team of colorectal surgeons led by Matthew Mutch, MD, professor of surgery. Together, they’re exploring whether co-registered ultrasound and photoacoustic imaging, paired with AI, can assess how rectal cancers respond to treatment. Zhu’s team is also testing whether pairing optical coherence tomography with AI can improve the accuracy of colon cancer diagnosis.

“In engineering, we are giving algorithms and instruments the powers of quantitative analysis and decision making. The forefront of imaging has moved beyond making pretty pictures, regardless of modality. The PhD in Imaging Science has attracted, and will continue to attract, students who are open to innovating the whole of imaging, from probing targets and manipulating light to diagnosing disease, in new ways.”

Matthew Lew, PhD, associate professor of electrical & systems engineering